Run Replicate models on Brev

Replicate is a model hosting and discovery platform. Its pay-per-use plan bills fine-tuning and inference tasks down to the second, so you don't have to set up your own infrastructure.

Comparing Costs

As of May 2023, Replicate's prices are as follows:

| GPU | Price |
|---|---|
| T4 | $1.98/hr |
| A100 40GB | $8.28/hr |
| A100 80GB | $11.52/hr |

For similar GPUs on Google Cloud, the prices are as follows:

| GPU | Price |
|---|---|
| T4 | $0.44/hr |
| A100 40GB | $3.67/hr |
| A100 80GB | $6.21/hr |
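
One way to read the two tables together is as a break-even point: the fraction of each hour a GPU has to be busy before an always-on instance becomes cheaper than per-second billing. The sketch below is a rough estimate only. It uses the hourly prices listed above and assumes that Replicate charges only for busy time and that the dedicated instance runs 24 hours a day. It ignores cold starts, storage, network egress, and any platform fees. The function names are illustrative.

```python
# Hourly prices copied from the two tables above (USD per GPU-hour, May 2023).
REPLICATE_PER_HOUR = {"T4": 1.98, "A100 40GB": 8.28, "A100 80GB": 11.52}
GOOGLE_CLOUD_PER_HOUR = {"T4": 0.44, "A100 40GB": 3.67, "A100 80GB": 6.21}


def break_even_busy_fraction(gpu: str) -> float:
    """Fraction of wall-clock time the GPU must be busy for both options to cost the same."""
    return GOOGLE_CLOUD_PER_HOUR[gpu] / REPLICATE_PER_HOUR[gpu]


def monthly_costs(gpu: str, busy_hours_per_day: float, days: int = 30) -> tuple[float, float]:
    """Return (pay-per-use cost, always-on cost) in USD for one month.

    Assumption: pay-per-use is billed only for busy hours, while the
    dedicated instance is billed for all 24 hours of every day.
    """
    pay_per_use = REPLICATE_PER_HOUR[gpu] * busy_hours_per_day * days
    always_on = GOOGLE_CLOUD_PER_HOUR[gpu] * 24 * days
    return pay_per_use, always_on


if __name__ == "__main__":
    for gpu in REPLICATE_PER_HOUR:
        fraction = break_even_busy_fraction(gpu)
        print(f"{gpu}: break-even at {fraction:.0%} utilization "
              f"(about {fraction * 24:.1f} busy hours per day)")
```

Under these assumptions, a T4 breaks even at roughly 22% utilization and an A100 40GB at roughly 44%. Below those levels, pay-per-use is the cheaper option.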

Because Replicate is pay-per-use, some calls incur a cold start, which increases latency. If you run a lot of predictions or fine-tuning jobs, it may make sense to run your models on always-on, dedicated infrastructure such as Google Cloud, AWS, or Lambda Labs.

Running models on cloud VMs is typically a bit of a headache. You have to deal with VPCs, firewalls, machine images, instance types, quotas, key pairs, and different kinds of volumes, file systems, operating systems, and driver versions, not to mention instance availability.

Luckily, we’ll be using two tools to simplify all of this: Cog and Brev. Cog simplifies running production-ready containers for machine learning. Brev finds and provisions AI-ready instances across various cloud providers.

Prerequisite

Create a Brev.dev account with either a payment method or a cloud connect set up.

Find the model and Cog Container on Replicate

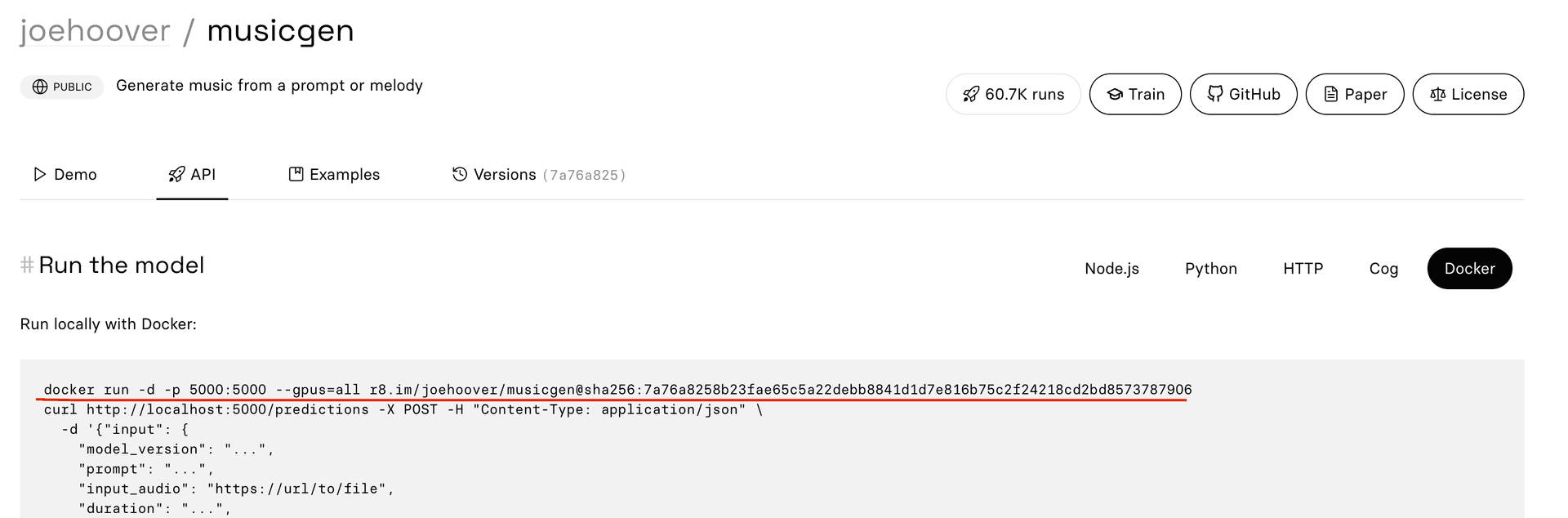

In this guide, we will use joehoover’s musicgen model as an example. Go to the model’s page on Replicate and select API → Docker. Copy and save the run command.

Configure the Brev Instance

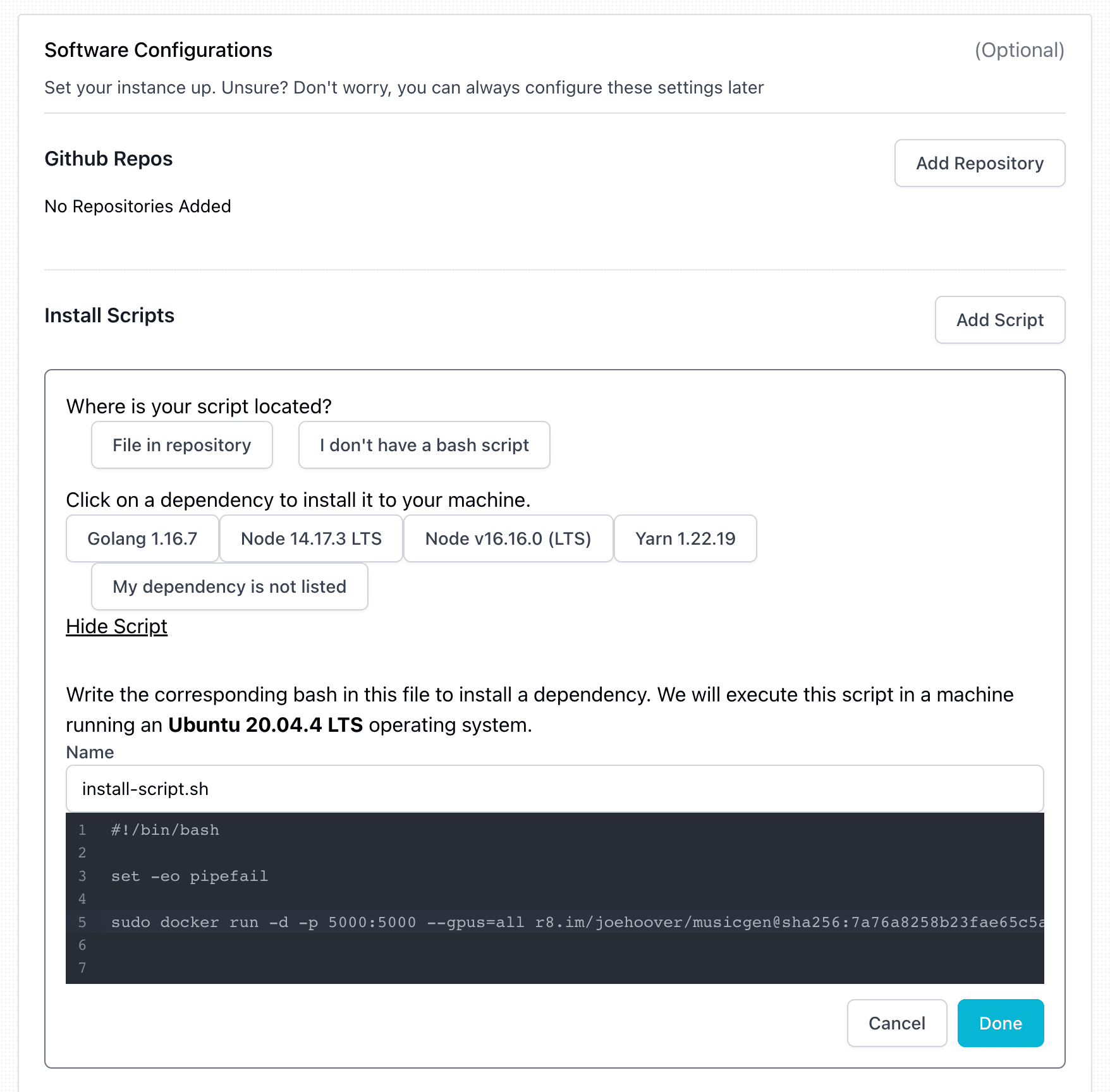

Create a new Brev instance. To add an install script, select “I don’t have a bash script”, then “Show script”. In the input field, enter the run command you copied above, prefixed with sudo so that it runs as root. This starts your model on port 5000, ready for inference. Read more about Cog deployments in the Cog docs. Finally, click “Done”.
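
Once the container is running, Cog's HTTP server accepts predictions as a `POST` to `/predictions` with a JSON body of the form `{"input": {...}}`. The following is a minimal sketch of a client using only the Python standard library. The helper names are illustrative, and the `prompt` input field is an assumption: check the model's API tab on Replicate for the inputs it actually accepts.

```python
import json
import urllib.request


def build_prediction_request(base_url: str, model_input: dict) -> urllib.request.Request:
    """Build a POST request for Cog's /predictions endpoint."""
    body = json.dumps({"input": model_input}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/predictions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def run_prediction(base_url: str, model_input: dict, timeout: float = 600.0) -> dict:
    """Send the request and return the decoded JSON response.

    The long default timeout is an assumption to allow for slow generations.
    """
    request = build_prediction_request(base_url, model_input)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


# Example usage from the instance itself (the input name is an assumption):
#   result = run_prediction("http://localhost:5000", {"prompt": "lo-fi piano with soft drums"})
#   print(result.get("status"), result.get("output"))
```

Building the request separately from sending it makes it easy to inspect the URL and payload before pointing the client at a real endpoint.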

Select GPU and Deploy

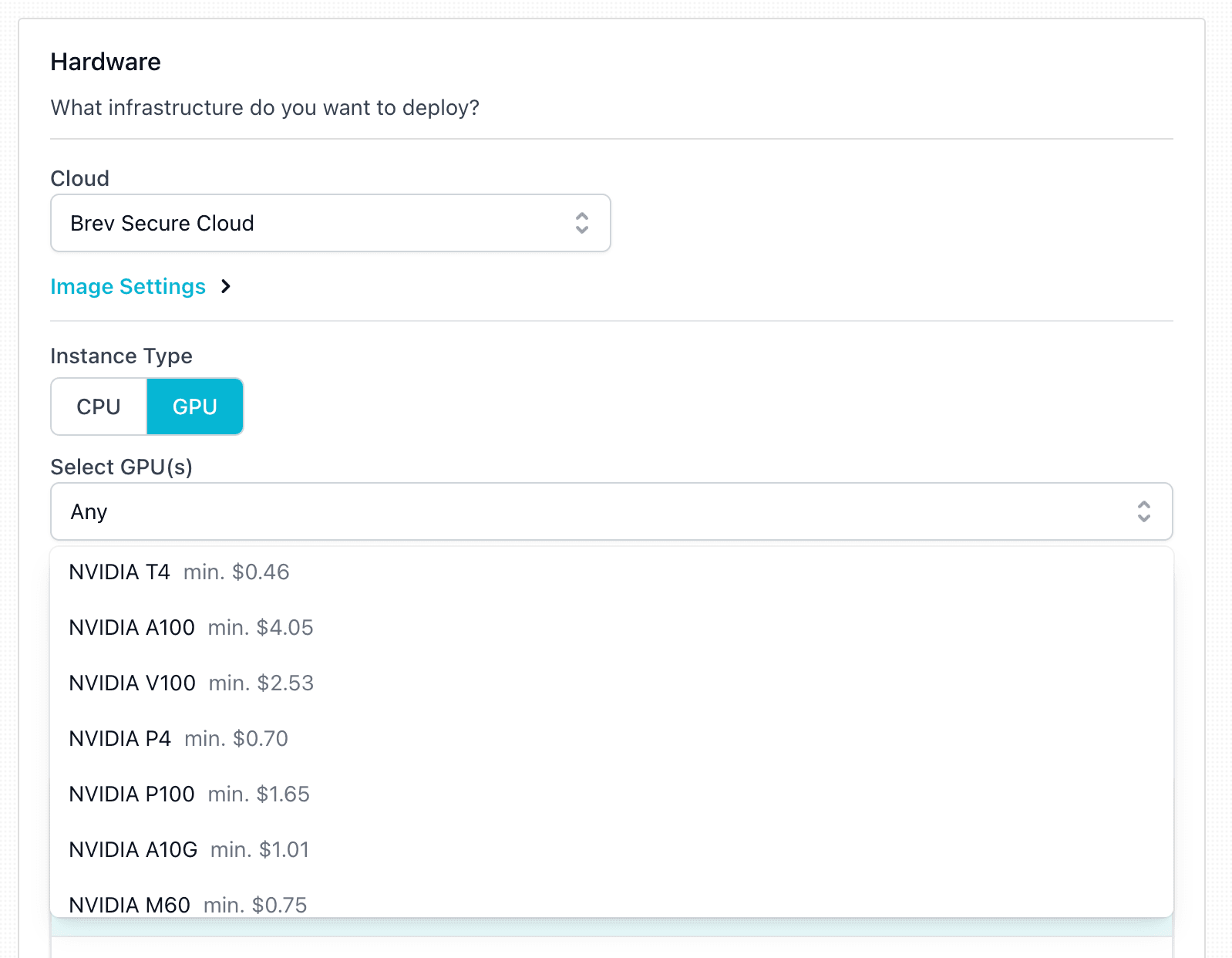

Select the GPU you want to use, name the instance, then create it.

Expose your model

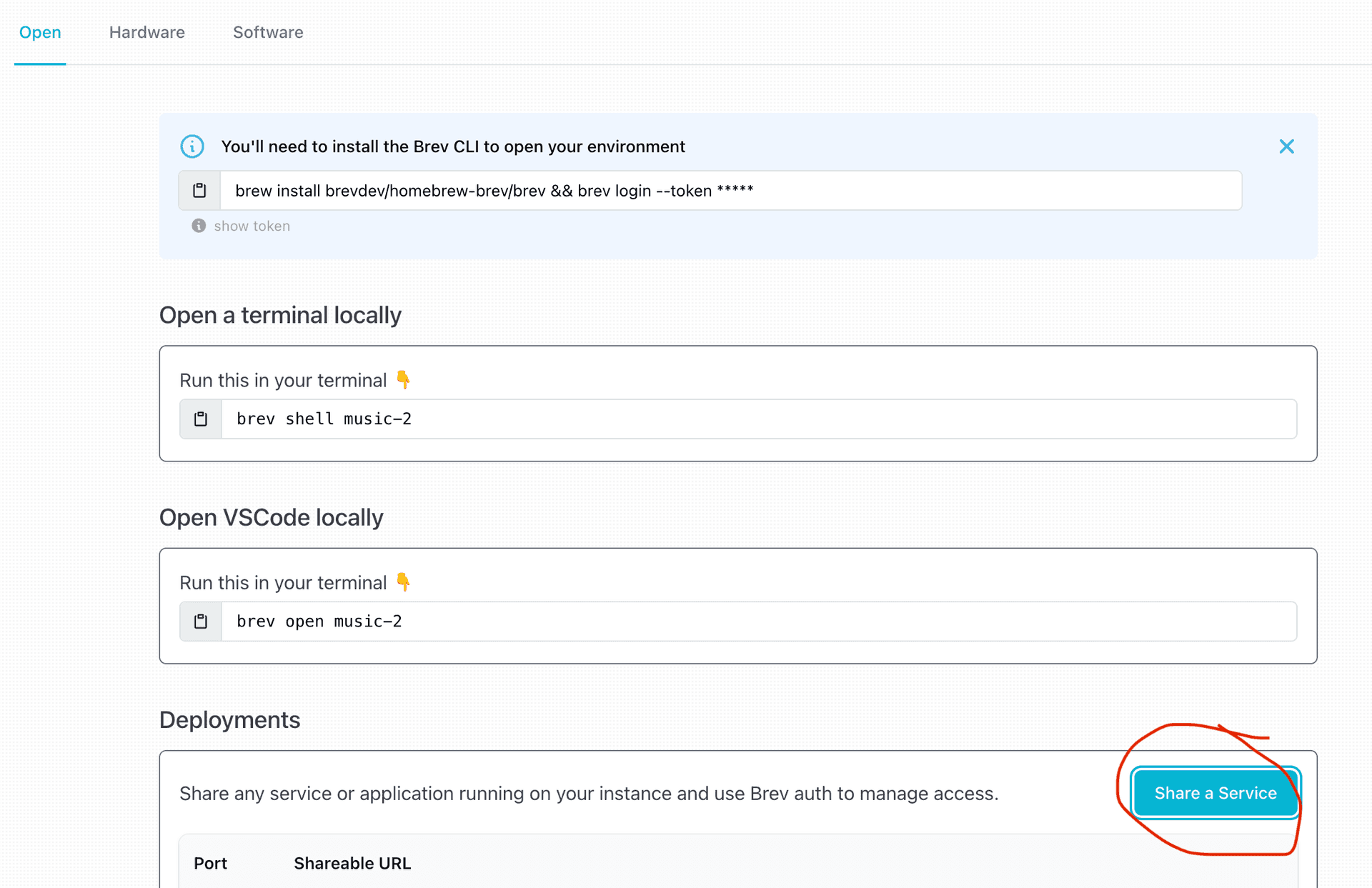

Click “Share a Service”, then enter the port your model is running on (5000 in this guide).

By default, the model API is private, so only your Brev user can access it, and only from a browser where you are logged in. To consume the API from your applications, you have two choices: an API key or public access. To create an API key, select “API Key”, then “Generate Key”. To make your service fully public, click “Edit Access” and toggle on “Public”.

Conclusion

Replicate is a fantastic tool for discovering and deploying AI/ML models on a pay-per-use basis. With heavy use, however, it can become an expensive option. In this guide, we used Brev and Cog to deploy a Replicate model on a dedicated GPU in the cloud of our choice.